Welcome back to the second part of our chatbot mini-series. If you missed our first article and want to know more about the chatbot landscape and the relevance of chatbots in today’s markets, you can find Part I here.

In this article we’ll be diving into the inner workings of a chatbot, focusing on the Natural Language Understanding (NLU) component. For those unfamiliar with it, NLU is a subfield of Natural Language Processing (NLP) in which Artificial Intelligence (AI) is used to attempt to comprehend the meaning of written sentences or speech. Its developments have allowed chatbots to become better at understanding us. We’ll take a step-by-step approach to getting started with NLU, using IBM’s Watson Assistant for a practical example. If you’re up for it, give it a try and tag along: there’s a free tier 😉

As before, at the end of this article, there’ll also be a few insights & tips about the subjects covered…but enough of this, let’s get started! 💪

What makes chatbots tick?

A chatbot sees a conversation as single rounds of back and forth requests & responses happening over and over. In each of these rounds, the state and context of the conversation are changing, but we can break down each round into a few main steps:

- Receive the user’s input

- Transform it into a standard data structure our chatbot can process (NLU)

- Decide the path and actions to take based on the conversation state, context and request (Dialog)

- Get the response back to the user

In this article, we’ll take a closer look at the first two steps, and we’ll cover the remaining steps in Part III.
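To make the four steps of a round more concrete, here’s a minimal Python sketch. All the names in it (`Conversation`, `understand`, `decide`, `handle_round`) are made up for illustration, and the NLU and dialog steps are placeholders rather than anything a real platform provides:

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """State and context carried from one round to the next."""
    context: dict = field(default_factory=dict)
    turns: int = 0


def understand(text: str) -> dict:
    # Step 2 (NLU): placeholder that only normalises the text.
    # A real chatbot would call an NLU service here.
    return {"text": text.strip().lower()}


def decide(conversation: Conversation, request: dict) -> str:
    # Step 3 (Dialog): choose a reply based on state, context and request.
    conversation.turns += 1
    conversation.context["last_request"] = request["text"]
    return f"Round {conversation.turns}: you said '{request['text']}'"


def handle_round(conversation: Conversation, user_input: str) -> str:
    # Step 1: the user's input arrives as an argument.
    request = understand(user_input)
    # Step 4: returning the reply stands in for sending it to the user.
    return decide(conversation, request)


conversation = Conversation()
print(handle_round(conversation, "  Hello "))
print(handle_round(conversation, "Bye"))
```

Notice that the same `Conversation` object is passed into every round, which is how state and context survive from one request to the next.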

Step 1: Receive the user’s input

The first thing you need to be able to do is receive messages from your users. Depending on which channel you’re going for (Facebook Messenger, Google Actions, etc.), this might involve implementing an SDK or, in most channels, just registering a webhook to receive new message events from conversations with your chatbot. We won’t focus too much on this step, as each platform has its own detailed instructions on how to set it up, but an example of what you might get on each event originating from Facebook chat can be seen here.
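As a rough idea of what handling such an event could look like, here’s a small sketch that pulls the sender and text out of a webhook payload. The payload below is a hand-written sample modelled on a Messenger-style message event, and `extract_text_messages` is a hypothetical helper, so check your platform’s documentation for the exact fields it sends:

```python
from __future__ import annotations


def extract_text_messages(payload: dict) -> list[tuple[str, str]]:
    """Return (sender_id, text) pairs for every text message in a payload."""
    messages = []
    for entry in payload.get("entry", []):
        for event in entry.get("messaging", []):
            text = event.get("message", {}).get("text")
            sender_id = event.get("sender", {}).get("id")
            # Skip events that are not plain text messages.
            if text and sender_id:
                messages.append((sender_id, text))
    return messages


# Hand-written sample payload (an assumption, not captured from a real channel).
sample_payload = {
    "object": "page",
    "entry": [
        {
            "messaging": [
                {
                    "sender": {"id": "user-123"},
                    "message": {"text": "Turn off my living room lights"},
                }
            ]
        }
    ],
}

print(extract_text_messages(sample_payload))
```

Using `.get()` with defaults keeps the function from crashing on events that carry no text, such as delivery receipts.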

Step 2: NLU

Once you have access to the user’s request, you need to transform its input into something that your chatbot will understand. This is where NLU comes into play. You can use it to take the incoming message and transform it into a standard data structure that you can build a dialog tree around.

The typical outcome of NLU processing is a set of Intents and Entities. Here’s an example:

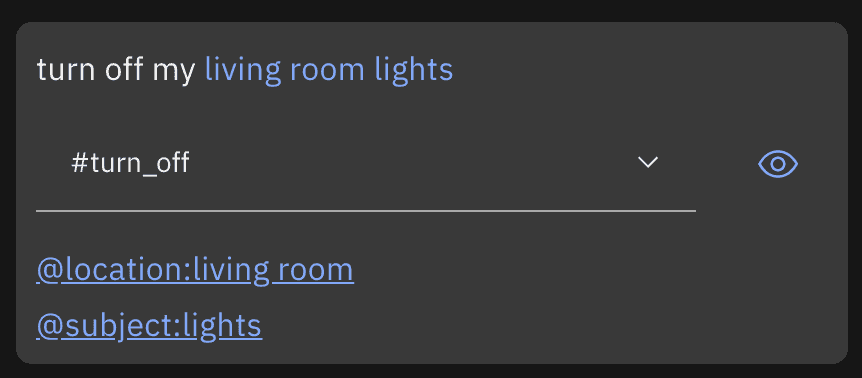

User input: “Turn off my living room lights”

NLU processing outcome:

Intents: turn_off

Entities:

- location: living room

- subject: lights

Intents attempt to categorise the action associated with the input, while entities allow you to get additional contextual information from the sentence. You can think of them as the whats, the hows, the whens, and so forth.
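If it helps to picture that “standard data structure”, here’s one way the outcome above could be represented in Python. The `Entity` and `NluResult` classes are our own illustration, not the format any particular NLU service returns:

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    name: str   # the kind of information, e.g. "location"
    value: str  # what was found in the sentence, e.g. "living room"


@dataclass
class NluResult:
    text: str
    intents: list = field(default_factory=list)
    entities: list = field(default_factory=list)

    def entity_value(self, name):
        """Return the first value found for an entity name, or None."""
        for entity in self.entities:
            if entity.name == name:
                return entity.value
        return None


result = NluResult(
    text="Turn off my living room lights",
    intents=["turn_off"],
    entities=[Entity("location", "living room"), Entity("subject", "lights")],
)

print(result.intents[0], result.entity_value("location"), result.entity_value("subject"))
```

A dialog can then branch on `result.intents` and read the details it needs through `entity_value`, without ever looking at the raw sentence again.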

Note that a blank NLU model won’t be able to understand any of these things out of the box. NLU services will usually let you enable built-in recognition for some common intents (greetings, goodbyes, …) and entities (dates, times, locations, numbers, …). Still, once you start building your chatbot, you’ll need to decide what you want it to be able to understand and train your NLU model to recognise it.

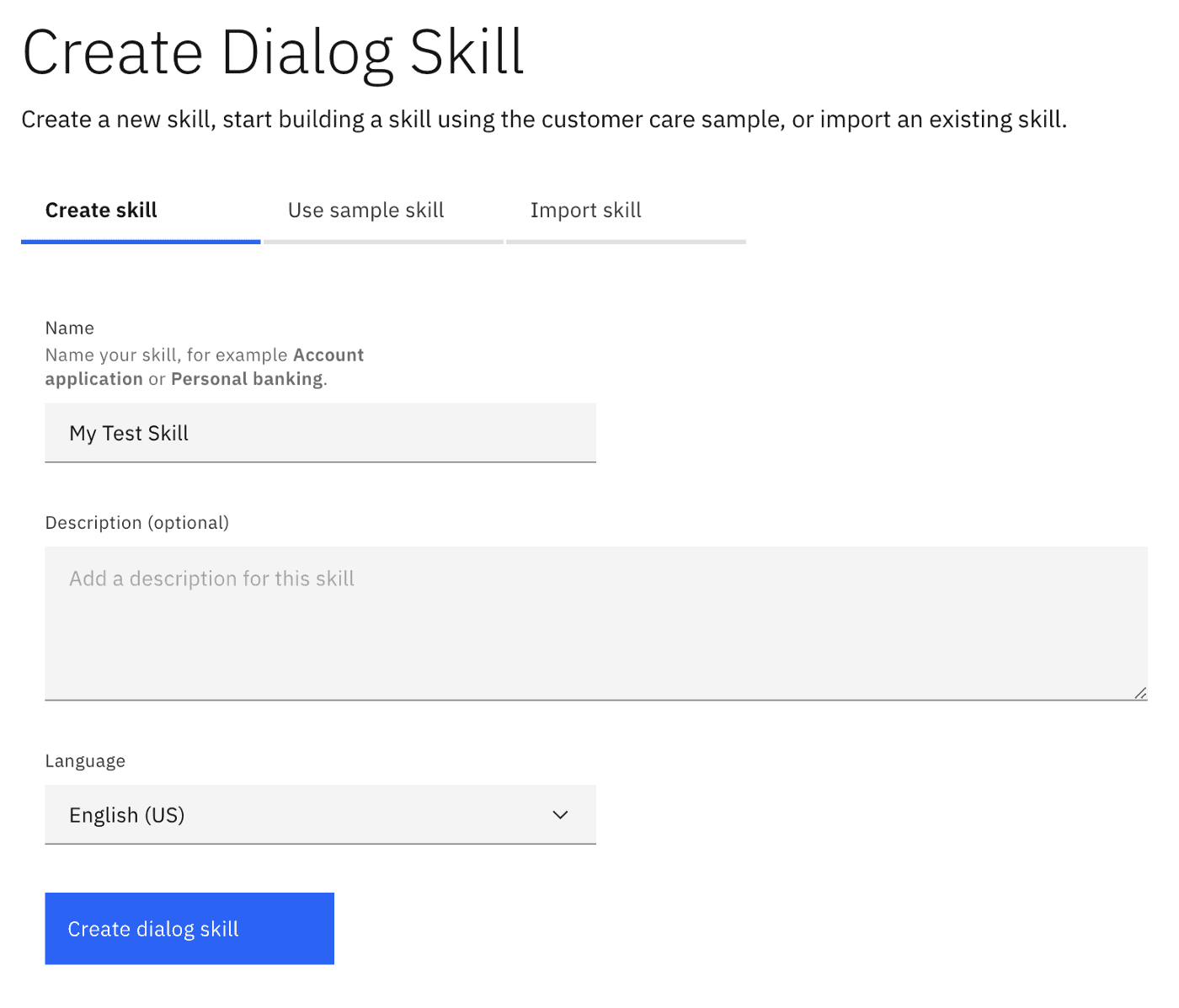

Let’s see how you can do that by creating an NLU model capable of understanding requests to turn things off, replicating the example above. To get started, let’s create a blank dialog skill in Watson Assistant:

To teach it how to understand an intent, we need to provide it with different examples of what people might typically say to achieve that goal. In this case, let’s create the turn_off intent — a way of users asking to turn something off — with the following examples:

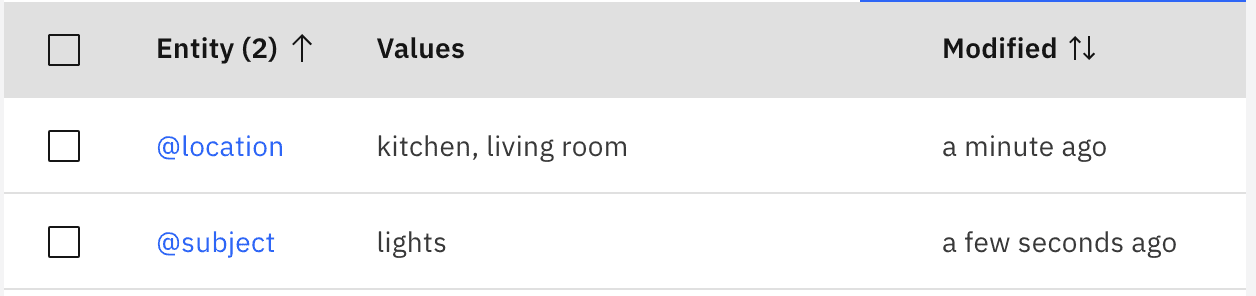

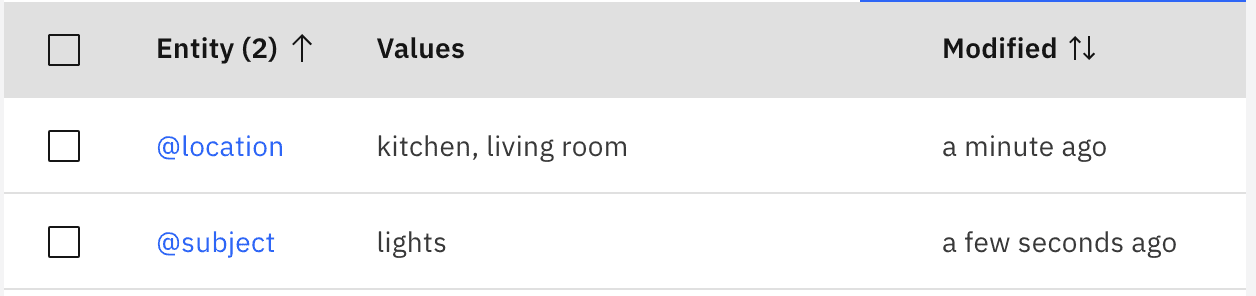

For a production rollout we could certainly add more, but for the purpose of this example these will be enough. With the intent out of the way, we also have to teach the model about the two entities we want it to look for: locations and subjects. These are the things we want to look out for in requests to “turn something off”. We want to know what to turn off and, potentially, in which location. To do this, let’s create both entities and specify the values they could take:

Note that these could take more values, but for now, we’ll assume our chatbot’s goal is just to be able to understand requests related to lights in our kitchen and our living room. To improve their recognition, we also add a few synonyms for each value:

When specifying your entities, always take a few moments to think of synonyms that people might use to refer to them, as they represent a quick and easy improvement to your chatbot’s capability to understand natural requests. In this example, we’ve added lamp, bulb and chandelier as synonyms that people might use when asking to turn off the lights. Note that in Watson, entities will fuzzy match values by default, so we don’t need to add plurals or typos for these.
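To see why synonyms help, here’s a toy dictionary-based matcher in Python. It’s only a sketch of the idea and not how Watson matches entities: there’s no fuzzy matching beyond an optional trailing “s”, `find_entities` is a made-up helper, and the “lounge” synonym is a hypothetical addition for the sake of the example:

```python
import re

# Entity name -> canonical value -> synonyms.
ENTITIES = {
    "subject": {"lights": ["lamp", "bulb", "chandelier"]},
    "location": {
        "kitchen": [],
        "living room": ["lounge"],  # hypothetical synonym, for illustration only
    },
}


def find_entities(text: str) -> dict:
    """Map each entity name to the canonical value mentioned in the text."""
    found = {}
    lowered = text.lower()
    for entity, values in ENTITIES.items():
        for value, synonyms in values.items():
            for term in [value, *synonyms]:
                # Whole-word match, allowing an optional plural "s".
                if re.search(rf"\b{re.escape(term)}s?\b", lowered):
                    found[entity] = value
                    break
    return found


print(find_entities("Turn off my living room lights"))
print(find_entities("Switch off the lamps in the lounge"))
```

Both requests resolve to the same canonical values, so whatever comes after NLU only ever has to deal with “lights” and “living room”.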

Ok! We’ve created the turn_off intent and the two entities we needed so our NLU model should now be able to:

- Understand when a user asks to turn something off

- Identify any subject and location included in those requests

Let’s give it a go! 🤞🏻

Success 🎉 Still, you might be thinking…

“What’s so special about that? That looks just like regular string matching”

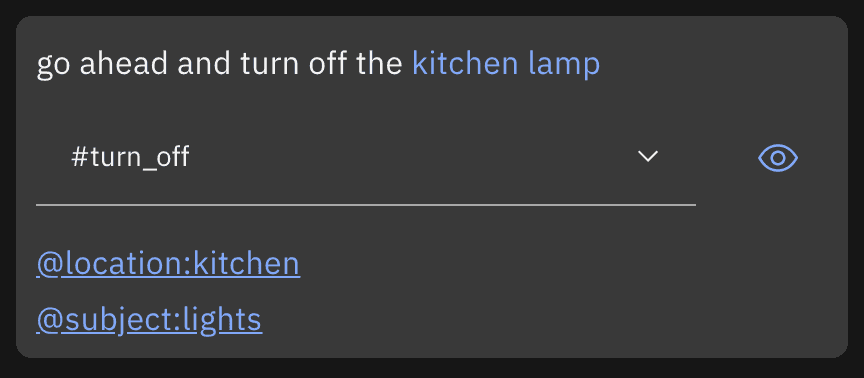

The magic of NLU is that through machine learning, even if the user goes for a different utterance we can still get the same intent and entities returned to us, without ever explicitly teaching the model that sentence. Let’s try something slightly different:

We still got the same results! This means that from a relatively small number of examples, you can start building a chatbot that understands many of the variations we see between how each person asks for something.

Try playing around with it for a while using different sentences users might use to ask to turn lights off. If you find one that the chatbot doesn’t understand, teach it by adding it as an example to the turn_off intent and try again. The more iterations you go through, the more robust your chatbot will become 🦾

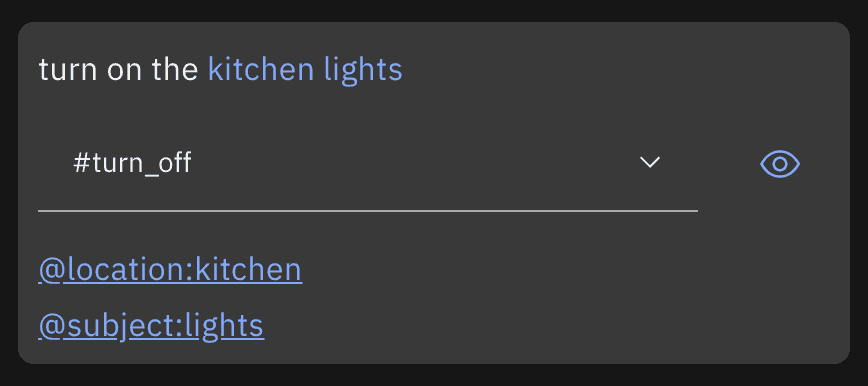

What happens if we ask our model about anything else though? Since we started with a blank model, most things just won’t be understood at all, but even worse, let’s see what happens if we ask it to turn the lights back on:

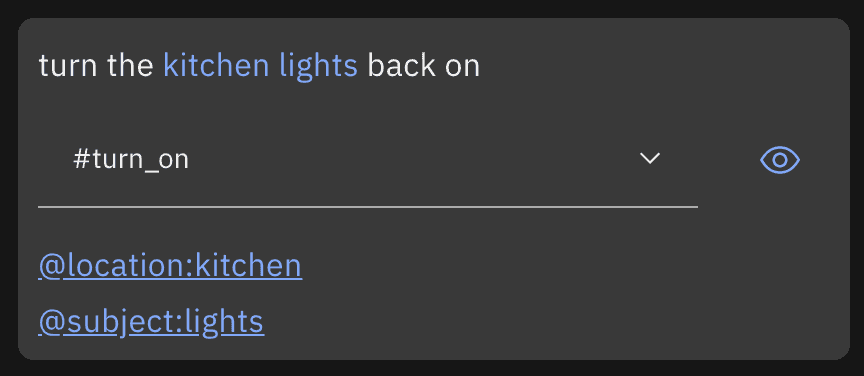

That doesn’t look right 🤔 It still thinks we’re trying to turn the lights off, even though we’re trying to turn them on. Why is that? Our current model only knows about turning things off, so from its point of view this request looks pretty similar to the examples it was trained on. Let’s add a turn_on intent as well and see if that makes a difference:

🎉 Now that our model knows about turning things on as well, it can easily distinguish between both intents. Notice how the entities we specified are still correctly identified in these requests. That’s because entities aren’t really tied to intents: they will always be recognised whenever they appear in a sentence.
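You can reproduce this effect with a toy classifier. The sketch below simply picks the training example that shares the most words with the request (Jaccard similarity), which is a deliberately crude stand-in for the machine learning a real NLU service uses. The training sentences are made up for illustration and are not the ones from our Watson skill:

```python
def tokens(text):
    return set(text.lower().replace(",", " ").split())


def similarity(a, b):
    """Jaccard similarity between the word sets of two sentences."""
    ta, tb = tokens(a), tokens(b)
    if not (ta | tb):
        return 0.0
    return len(ta & tb) / len(ta | tb)


def classify(text, training):
    """Return (intent, score) for the training example most similar to text."""
    return max(
        ((intent, similarity(text, example))
         for intent, examples in training.items()
         for example in examples),
        key=lambda pair: pair[1],
    )


# Hypothetical training data.
off_only = {
    "turn_off": ["turn off the lights", "switch the lamp off", "lights off please"],
}
both = {
    **off_only,
    "turn_on": ["turn on the lights", "switch the lamp on", "lights on please"],
}

request = "turn the lights on"
print(classify(request, off_only))  # turn_off is the only option, so it wins
print(classify(request, both))      # turn_on is now the closest match
```

With a single intent, the best available match is always that intent, however weak the score. Once a competing intent exists, the classifier has something to compare against.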

That’s it for Part II. We’ve covered the basics of NLU and created a Watson Assistant skill capable of understanding requests to turn things on and off. In the next and final article of this mini-series, we’ll cover the remaining two steps and show you how to create a dialog, having your chatbot reply and perform actions based on the user’s requests. If that sounds interesting, stay tuned!

As promised, before we go, here are a few insights & tips related to NLU:

💡 It’s important to understand what scope you want your chatbot to cover before deciding what you’re going to map as an intent or an entity. As we’ve just seen, this can easily make a difference in your model’s performance, and it will also impact its capability to evolve.

💡 The more you focus your efforts on the scope you want your chatbot to excel at, the more its users will be pleased with the experience. Just because AI is powering your chatbot’s understanding, it doesn’t mean it will be able to understand and chat about everything…and that’s probably not what you want anyway!

💡 Optimising your NLU model might depend on the channel you’re aiming for. For example, voice platforms (e.g. Alexa, Google Assistant) rely on Speech-to-Text to generate the user’s input, so sentences will commonly include wrong words that sound like the intended ones, while text-first platforms (e.g. webchat, FB Messenger, WhatsApp) will commonly include typos or abbreviations.

💡 Before going live, make sure you can track the requests your chatbot misunderstands and have a process in place for improving your intent categorisation. Chatbots improve over time as you train them with more examples from real conversations.
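One simple way to start tracking is to log each NLU result and flag the low-confidence ones for review. Here’s a small sketch, assuming you store the top intent and its confidence for every request. The log entries, the `needs_review` helper and the 0.5 threshold are all assumptions you’d adapt to your own chatbot:

```python
REVIEW_THRESHOLD = 0.5  # assumed cut-off; tune it for your own chatbot


def needs_review(results, threshold=REVIEW_THRESHOLD):
    """Return the utterances whose top intent confidence is below threshold."""
    return [r["text"] for r in results if r["confidence"] < threshold]


# Hypothetical log entries, for illustration only.
logged_results = [
    {"text": "turn off the kitchen lights", "intent": "turn_off", "confidence": 0.97},
    {"text": "lites of pls", "intent": "turn_off", "confidence": 0.31},
    {"text": "make it dark in here", "intent": "turn_off", "confidence": 0.42},
]

for utterance in needs_review(logged_results):
    print("Review:", utterance)
```

The flagged utterances are good candidates to add as new training examples once someone has confirmed the right intent for each.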

Part III is available here.